This navigation system is based on structures inspired by neurons involved in navigation in mammals, namely place cells, grid cells and head orientation cells.

Originally, this model was designed for a navigation assistance system for visually impaired people. It had to meet several constraints: indoor and outdoor navigation, measurement of displacements in all directions, a reduced field of view (no panoramic cameras), and no absolute odometry, all while remaining low on resources and therefore on energy. After testing visual SLAM models, we turned to biologically inspired approaches. Other applications are currently being studied, such as robotics, or even the guidance of a robotic swarm, made possible by the decentralized nature of the environment model.

Description of the model:

This navigation model is based on the joint use of structures inspired by certain types of neurons involved in navigation in mammals. Its robustness comes mainly from two properties: its predictive aspect, which estimates the possible observations around a given position, and the sequential aspect of the environment model, which compensates for a low number of visual cues. This model is described in our MDPI article.

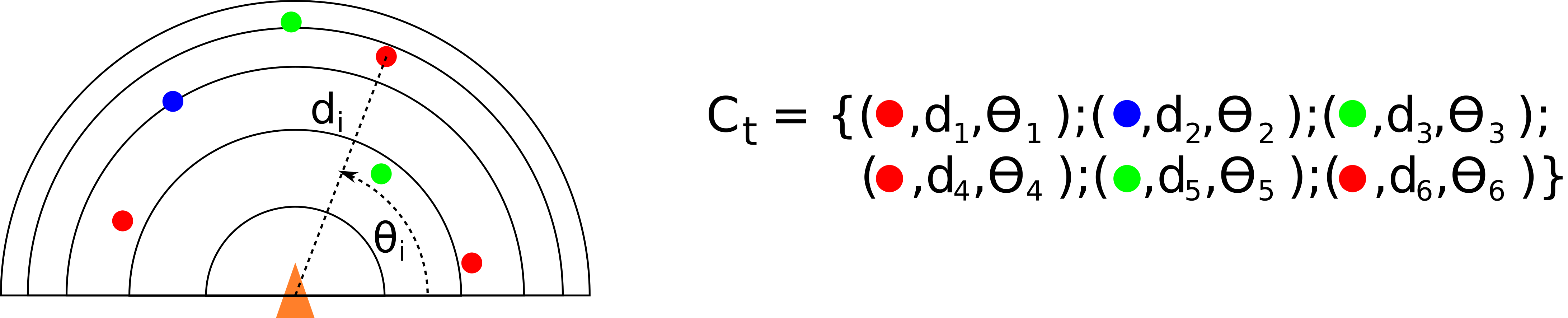

The model requires a sensory system that can recognize and localize distinctive elements in ego-centric space (stereovision system, lidar, optical flow...). The distinctive elements can be varied (simple color, object or pattern recognition, vertical line detection...). Ideally, the field of view should approach 180°, although 90° is theoretically sufficient.

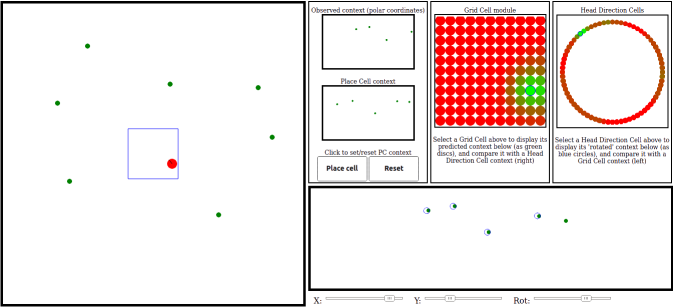

Example of perception system. Here, points of interest are defined by their color, and localized in polar coordinates.

- Place cells are structures characterizing a position in the environment, by recording the context observed at that position. Neighbor cells are connected together to form an easily exploitable navigation graph. The place cells use this recorded context to recognize already visited places.

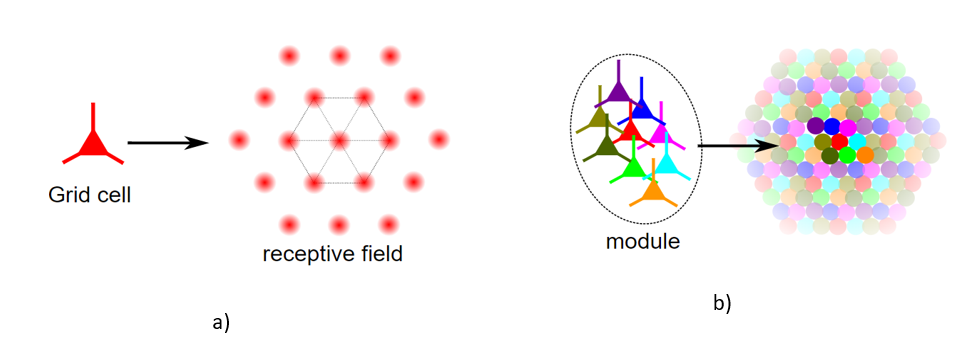

- Grid cells are neurons mainly involved in path integration. Their functioning is very particular: their receptive fields (the regions of space where they are activated) form the vertices of a regular hexagonal grid. They are gathered in groups, called modules. The grid cells of the same module form 'grids' of identical size and orientation, but with different offsets, so that a module covers the whole space with a small toroidal surface that repeats indefinitely.

(a) Grid cells have a receptive field forming a hexagonal grid. (b) A module allows characterizing the whole space.
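The toroidal repetition of a module can be illustrated with a minimal sketch. It is not the implementation used in the model: the function name `module_cell`, the lattice basis, and the parameters `spacing` and `size` are assumptions chosen for illustration. A displacement is expressed in the two basis vectors of a hexagonal lattice, rounded to a lattice index, and wrapped with a modulo.

```python
import math

def module_cell(x, y, spacing=1.0, size=5):
    """Map a displacement (x, y) to the (u, v) index of a grid cell in a module.

    Assumed lattice basis (hypothetical, for illustration only):
        a1 = spacing * (1, 0)
        a2 = spacing * (1/2, sqrt(3)/2)
    Rounding in this skewed basis only approximates the nearest lattice vertex.
    """
    # Solve (x, y) = u * a1 + v * a2 for the lattice coordinates (u, v).
    v = y / (spacing * math.sqrt(3) / 2)
    u = x / spacing - v / 2
    # The modulo makes the module toroidal: leaving on one side re-enters
    # on the opposite side, so the same cells repeat indefinitely in space.
    return round(u) % size, round(v) % size
```

With `size=5`, moving five spacings along the first basis vector returns to the starting cell, which is the repetition property described above.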

In our model, a module contains a set of grid cells. The spacing between the cells defines a displacement in space. As the module has a toroidal shape, we can associate a place cell with an arbitrarily chosen grid cell. This grid cell then becomes the 'center' of the module. By retrieving the context of the place cell and its own position relative to the center, each grid cell generates the context expected at the position it characterizes, relative to the place cell position.

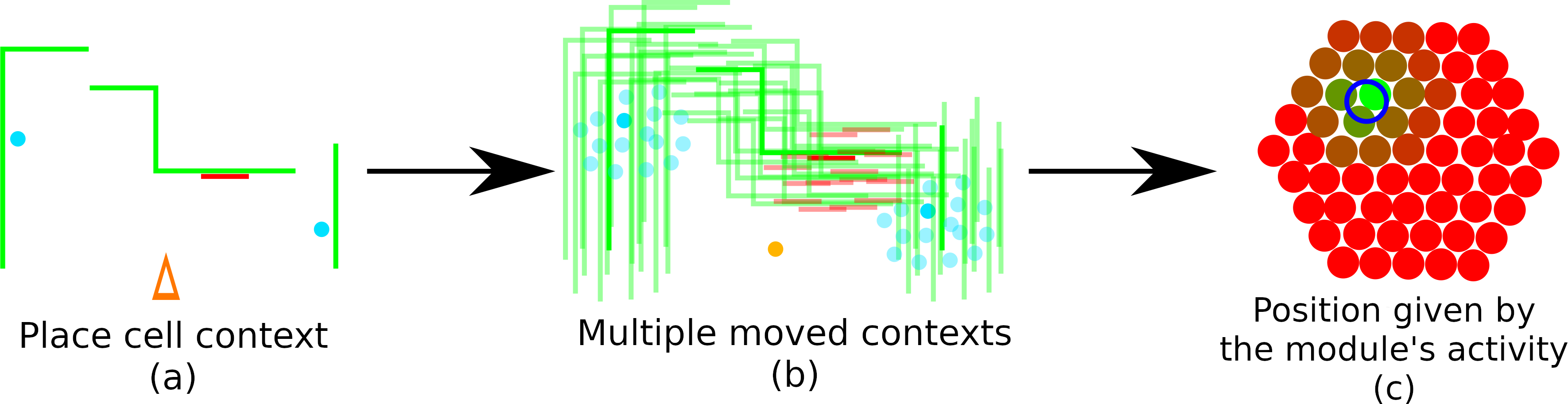

Localization principle: grid cells use the place cell context (a) to define predictions around this place cell (b). The activity of the module then gives the position around the place cell.
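As a rough illustration of how a grid cell could derive its expected context, the sketch below shifts every landmark recorded by the place cell by the offset of the grid cell. The representation of a context as `(label, distance, bearing)` tuples and the name `predict_context` are assumptions made for this example, not the data structures of the actual model.

```python
import math

def predict_context(context, offset):
    """Predict the landmarks seen from a grid cell displaced by `offset`.

    context: list of (label, distance, bearing) tuples recorded at the place
             cell, with bearings in radians (assumed representation).
    offset:  (dx, dy) position of the grid cell relative to the module center.
    """
    dx, dy = offset
    predicted = []
    for label, distance, bearing in context:
        # Landmark position in the place cell frame, seen from the new position.
        x = distance * math.cos(bearing) - dx
        y = distance * math.sin(bearing) - dy
        # Back to polar coordinates, as delivered by the perception system.
        predicted.append((label, math.hypot(x, y), math.atan2(y, x)))
    return predicted
```

For instance, a landmark recorded 2 units straight ahead is predicted 1 unit straight ahead by the grid cell located 1 unit forward of the center.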

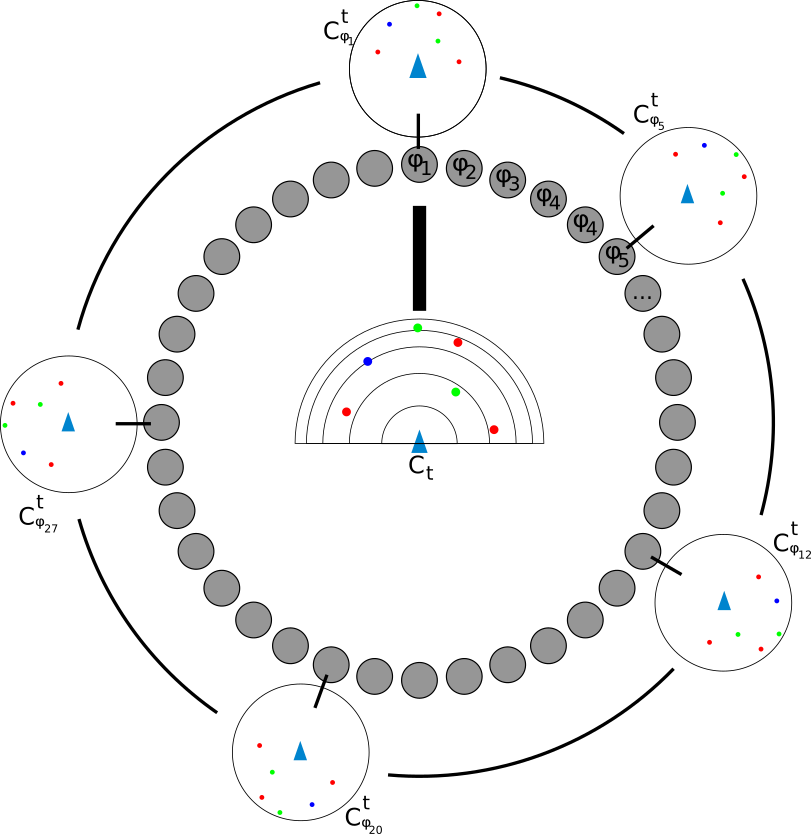

- Head direction cells are neurons that are activated when the head is oriented in a specific direction. In our model, each head direction cell is associated with a predefined orientation angle, and together the cells uniformly cover a full turn (360°). Each head direction cell computes, from the observed context and its angle, a 'rotated' context. By comparing these rotated contexts with the predicted contexts of the grid cells, we can find the head direction cell and grid cell pair with the highest similarity, which gives the precise position and orientation around the active place cell.

Head Direction Cells generate versions of the observed context with different orientations.
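The search for the best head direction cell and grid cell pair can be sketched as follows. This is only an illustration under stated assumptions: contexts are lists of `(distance, bearing)` pairs, the predictions are given as a dictionary from grid cell index to predicted context, and the nearest-point `dissimilarity` measure is a stand-in, since the similarity measure of the model is not specified here.

```python
import math

def to_xy(distance, bearing):
    return distance * math.cos(bearing), distance * math.sin(bearing)

def rotate_context(context, angle):
    """Rotated context produced by a head direction cell of the given angle."""
    return [(distance, bearing + angle) for distance, bearing in context]

def dissimilarity(ctx_a, ctx_b):
    """Mean distance from each point of ctx_a to its nearest point in ctx_b
    (assumed measure; lower means more similar)."""
    points_b = [to_xy(*p) for p in ctx_b]
    total = 0.0
    for p in ctx_a:
        ax, ay = to_xy(*p)
        total += min(math.hypot(ax - bx, ay - by) for bx, by in points_b)
    return total / len(ctx_a)

def best_pose(observed, predictions, n_directions=8):
    """Return the (grid cell, heading) pair whose contexts match best."""
    best = None
    for k in range(n_directions):
        heading = 2 * math.pi * k / n_directions
        rotated = rotate_context(observed, heading)
        for cell, predicted in predictions.items():
            score = dissimilarity(rotated, predicted)
            if best is None or score < best[0]:
                best = (score, cell, heading)
    return best[1], best[2]
```

The exhaustive loop over all pairs keeps the sketch short; it makes no claim about how the comparison is scheduled in the actual system.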

The interactive demo below shows a simplified implementation of this principle of localization around a place cell: place distinctive points in the environment (all of the same type), activate a place cell, and then move the agent to observe the activity of the grid cells and head direction cells. You can also observe the context of each cell and compare them.

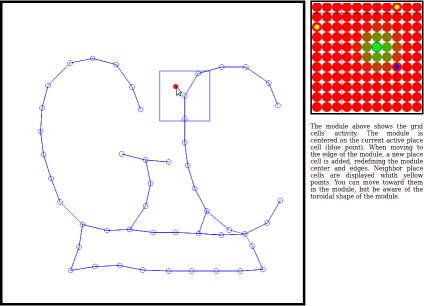

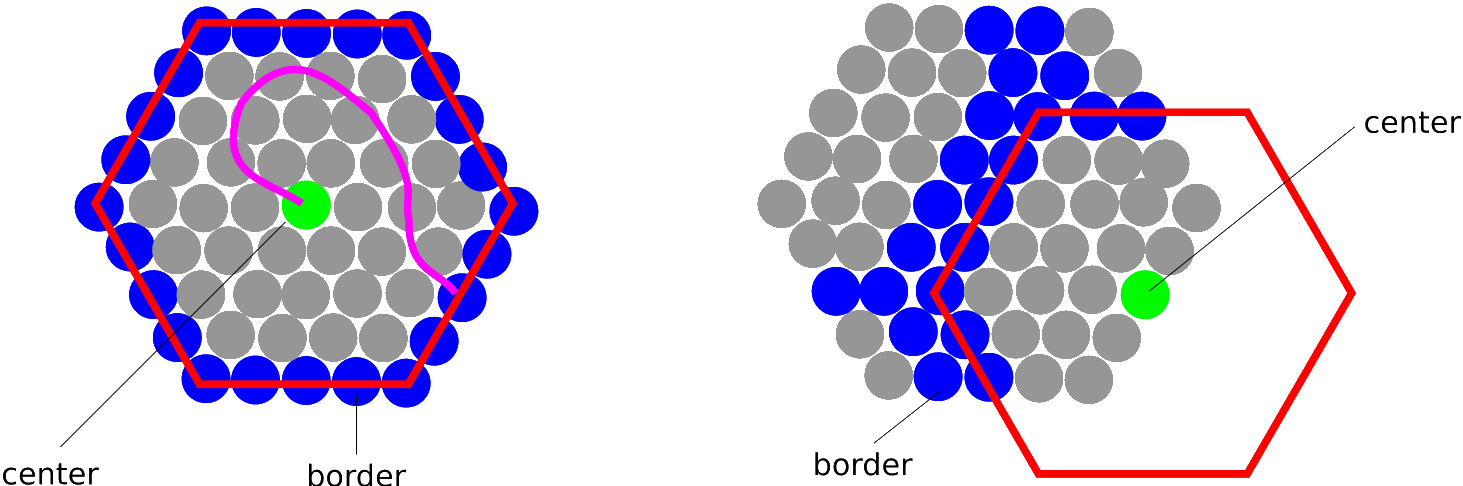

Of course, this principle limits the localization to the surface covered by the module. To continue tracking the position, the system relies on the following principle: when the center of the module is defined, a set of 'border' cells is also defined. When the agent reaches one of these 'border' cells, a new place cell is created and associated with this grid cell. The grid cells then compute new predictions from the context recorded by this new place cell and the new center of the module, so that tracking can continue.

Principle of place cell change and graph construction.
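A minimal sketch of this place cell change is given below. The class names `PlaceCell` and `GraphBuilder`, the square `(u, v)` offsets, and the `radius` parameter that defines the border are hypothetical simplifications. The offset of the most active grid cell is passed in directly, whereas in the model it comes from the activity of the module.

```python
from dataclasses import dataclass, field

@dataclass
class PlaceCell:
    ident: int
    context: list
    # Neighbor id -> offset on the module at which the neighbor was created.
    neighbors: dict = field(default_factory=dict)

class GraphBuilder:
    def __init__(self, first_context, radius=2):
        self.radius = radius  # assumed distance from the center to the border cells
        self.cells = [PlaceCell(0, first_context)]
        self.active = 0       # id of the place cell at the module center

    def is_border(self, offset):
        return max(abs(offset[0]), abs(offset[1])) >= self.radius

    def update(self, offset, context):
        """offset: (u, v) of the most active grid cell relative to the center."""
        if self.is_border(offset):
            old = self.cells[self.active]
            new = PlaceCell(len(self.cells), context)
            # Link both cells so the graph can be followed in either direction.
            old.neighbors[new.ident] = offset
            new.neighbors[old.ident] = (-offset[0], -offset[1])
            self.cells.append(new)
            # The border grid cell becomes the new center of the module.
            self.active = new.ident
        return self.active
```

Calling `update` with an offset inside the border leaves the active place cell unchanged; reaching the border creates a new cell and an edge in the navigation graph.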

The interactive demo below illustrates this principle of navigation graph construction. Click in the environment, then slowly move around to observe the position on the module (here, we simply use the cursor position) and the place cell changes.

Videos of experiments:

Construction of the navigation graph (manual control). Top right: grid cells and head direction cells. Bottom right: place cell graph.

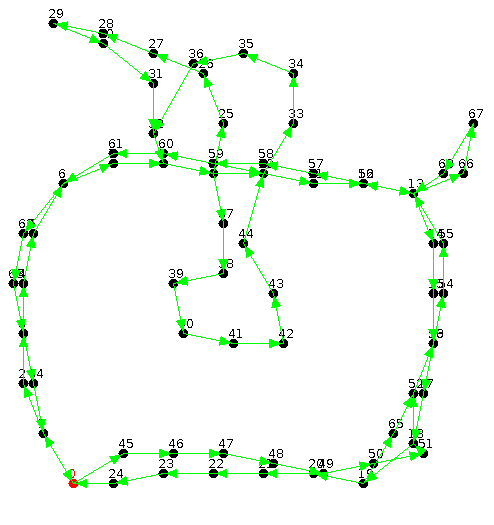

Navigation graph after environment exploration. Note that parts of the environment with few visual cues produce sequences of "directional" place cells. The model thus compensates for the lack of visual cues with the sequential aspect of the graph.

Autonomous navigation, following a sequence of place cells. The agent only uses its visual system to navigate (no measurement of movements).

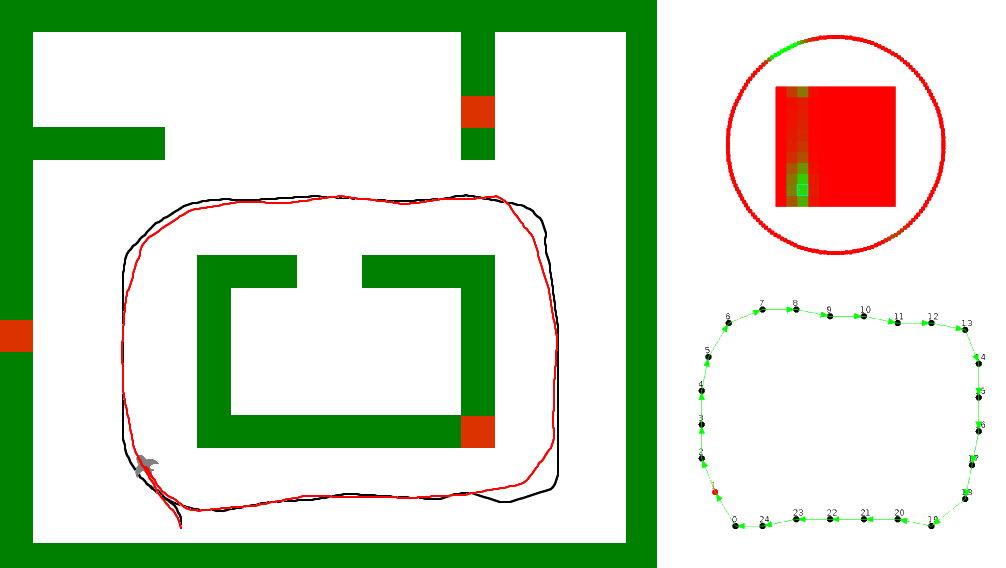

Comparison between the route performed with manual control to create a sequence of place cells (black line) and the route performed with automatic control following this sequence (red line).

Homing to the start point, by following the reverse sequence of place cells (blue line). As the agent cannot see behind itself, it can only rely, for the return, on the estimated distance between place cells and on visual movement measures using new place cells. The guiding system provides fairly reliable path integration.

Tolerance to environmental changes: when modifying the environment, the guiding system still finds and recognizes landmarks to follow the place cell sequence defined in the initial environment.

Shortcut detection (green line): the agent can estimate the position of the place cells around it, and move toward the place cell that can be reached in a straight line (without an obstacle between the agent and the estimated position) and that is the closest to the last cell of the sequence.
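The shortcut selection can be sketched as below, under two assumptions that are not confirmed by the description: "closest to the last cell of the sequence" is read as "furthest along the sequence order", and obstacles are modeled as circles `(x, y, radius)`. The function names `segment_clear` and `pick_shortcut` are illustrative.

```python
import math

def segment_clear(start, end, obstacles):
    """True if the straight segment start -> end misses every circular obstacle."""
    sx, sy = start
    ex, ey = end
    dx, dy = ex - sx, ey - sy
    length_sq = dx * dx + dy * dy
    for ox, oy, radius in obstacles:
        if length_sq == 0:
            t = 0.0
        else:
            # Parameter of the point of the segment closest to the obstacle center.
            t = ((ox - sx) * dx + (oy - sy) * dy) / length_sq
            t = max(0.0, min(1.0, t))
        cx, cy = sx + t * dx, sy + t * dy
        if math.hypot(ox - cx, oy - cy) <= radius:
            return False
    return True

def pick_shortcut(agent, estimated_positions, obstacles):
    """Index of the reachable place cell that is furthest along the sequence.

    estimated_positions: estimated (x, y) of each place cell, in sequence order.
    Returns None when no cell can be reached in a straight line.
    """
    for index in range(len(estimated_positions) - 1, -1, -1):
        if segment_clear(agent, estimated_positions[index], obstacles):
            return index
    return None
```

Scanning the sequence from its end means the first reachable cell found is the one that skips the largest part of the route.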