Sequential learning algorithms have shown their limits in open environments: the agent is unable to notice that two different sequences of movements may lead to the same point in space (for example, turning left three times by 90° is equivalent to turning right once), and, as it has no object persistence, it stops pursuing a target of interest as soon as its sensors lose the target.

Inspired by the tectum (called the colliculus in mammals), a region of the vertebrate brain specialized in space representation, Olivier Georgeon implemented a space memory that allows an agent to localize elements in its surrounding environment. However, this memory relied on a set of preconceptions (such as the position of the sensors in the egocentric reference frame, the movements of these elements, and the associations between visual and tactile stimuli).

I carried out preliminary studies to develop mechanisms that allow an agent to learn this information. Three algorithms were developed:

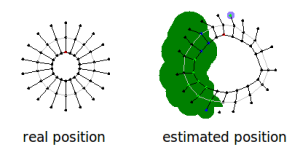

- The sensor mapping learns the position of the sensors and how they cover space. This algorithm treats every kind of sensor as a set of binary sensors that we call points of perception. Each point is sensitive to a specific value of the sensor range. The algorithm assumes that the distance between two of these points is proportional to the average delay between changes in the values of these two points. We tried this algorithm on an agent equipped with 18 short-range sensors. Although the estimated structure is far from matching the real one, the sensor distribution is recognizable.

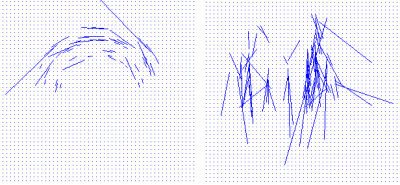
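
The delay-based distance assumption used by the sensor mapping can be illustrated with a minimal sketch. It is not the implementation used in the study: the function names, the "nearest change" pairing rule, and the toy signals (an edge sweeping over three points placed at hypothetical positions 0, 2 and 5) are all assumptions made for illustration.

```python
def change_times(signal):
    """Return the time steps at which a binary signal changes value."""
    return [t for t in range(1, len(signal)) if signal[t] != signal[t - 1]]


def average_delay(signal_a, signal_b):
    """Mean delay between each change of a and the nearest change of b.

    Assumption: pairing each change with the nearest change of the other
    point is one simple way to measure the delay; the source does not
    specify the pairing rule.
    """
    times_a = change_times(signal_a)
    times_b = change_times(signal_b)
    if not times_a or not times_b:
        return None  # no evidence: one of the points never changed
    delays = [min(abs(ta - tb) for tb in times_b) for ta in times_a]
    return sum(delays) / len(delays)


def delay_matrix(signals):
    """Pairwise average delays, used here as distance estimates."""
    n = len(signals)
    return [[average_delay(signals[i], signals[j]) for j in range(n)]
            for i in range(n)]


# Toy data (hypothetical): an edge moving one unit per time step switches
# on three points of perception located at positions 0, 2 and 5.
positions = (0, 2, 5)
signals = [[1 if t > p else 0 for t in range(8)] for p in positions]

# The delays between points reproduce the spacings between the positions.
for row in delay_matrix(signals):
    print(row)
```

In this sketch the matrix is not symmetric in general, so a real system would probably average the two directions before trying to place the points in space.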

- The action mapping learns the movement of objects in the environment while the agent is acting. It consists of an optic flow algorithm applied to the set of perception points given by the sensor mapping. This algorithm was tested on a predefined structure of the visual system (5° angular resolution for a total span of 180°). Each pixel gives the distance of the detected point. Figure 2 shows the optic flow measured for the translation and the right turn (the agent is at the center of the vector field).

Right rotation (left) and translation (right).

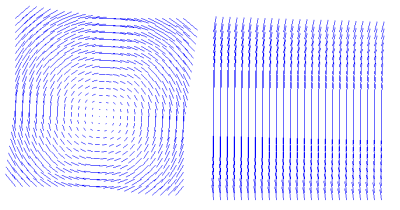
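
As a much simplified illustration of measuring flow on such a visual system, the sketch below estimates a single angular shift (in pixels) between two successive distance profiles by block matching. This is a swapped-in, one-dimensional technique chosen for brevity, not the optic flow algorithm of the study; the function name, the `max_shift` parameter and the synthetic profile are assumptions.

```python
def best_shift(before, after, max_shift=3):
    """Return the pixel shift s that best maps `before` onto `after`.

    For each candidate shift, the mean squared difference between
    before[i] and after[i + s] is computed over the overlapping pixels,
    and the shift with the smallest error is kept.
    """
    best, best_err = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(before[i], after[i + s])
                 for i in range(len(before))
                 if 0 <= i + s < len(after)]
        err = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if err < best_err:
            best, best_err = s, err
    return best


# 180 degree span at 5 degree resolution gives 37 pixels, each holding a
# distance. The profile below is synthetic (hypothetical values).
before = [float((i * 7) % 11 + 1) for i in range(37)]

# Simulate an action that shifts the whole scene by two pixels; the two
# pixels entering the field of view receive arbitrary new distances.
after = [9.0, 9.0] + before[:-2]

shift = best_shift(before, after)
```

A per-pixel flow field, as in the figure, would require matching small neighbourhoods around each pixel rather than the whole profile.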

If we suppose that the agent knows that its movements are combinations of translations and rotations, we can compute the average translation and rotation coefficients for each action. Figure 3 shows the vector fields obtained by applying these coefficients to the whole space memory.

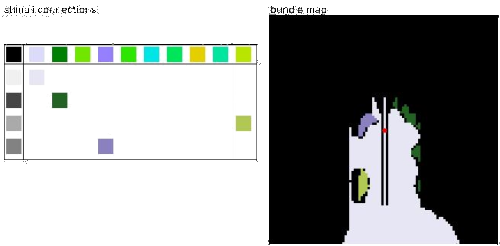
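
One possible way to obtain translation and rotation coefficients from a measured vector field is a least-squares fit of the small-motion model v = (tx - ω·y, ty + ω·x). The sketch below uses this fit as a stand-in for the averaging described above, which the source does not detail; the names `fit_rigid_flow`, `predict_flow`, `tx`, `ty` and `omega` are hypothetical.

```python
def predict_flow(x, y, tx, ty, omega):
    """Flow at (x, y) for a translation (tx, ty) plus a small rotation."""
    return (tx - omega * y, ty + omega * x)


def fit_rigid_flow(points, flows):
    """Least-squares estimate of (tx, ty, omega) from sampled flow vectors."""
    n = len(points)
    mean_px = sum(x for x, _ in points) / n
    mean_py = sum(y for _, y in points) / n
    mean_vx = sum(vx for vx, _ in flows) / n
    mean_vy = sum(vy for _, vy in flows) / n

    num = 0.0
    den = 0.0
    for (x, y), (vx, vy) in zip(points, flows):
        qx, qy = x - mean_px, y - mean_py      # centred position
        ux, uy = vx - mean_vx, vy - mean_vy    # centred flow
        num += qx * uy - qy * ux               # rotational component
        den += qx * qx + qy * qy
    omega = num / den if den else 0.0

    # The translation is what remains of the mean flow once the rotation
    # about the origin (the agent) is accounted for.
    tx = mean_vx + omega * mean_py
    ty = mean_vy - omega * mean_px
    return tx, ty, omega


# Synthetic check (hypothetical values): generate a field from known
# coefficients, then recover them from the samples.
points = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (2.0, 3.0)]
true_motion = (0.5, -1.0, 0.1)
flows = [predict_flow(x, y, *true_motion) for x, y in points]
tx, ty, omega = fit_rigid_flow(points, flows)
```

Once the three coefficients are known, `predict_flow` can be evaluated at any position, including places where no stimulus was observed, which is what extending the field to the whole space memory requires.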

Now that we can determine the origins of stimuli and their movements, we can fill a map of the stimuli around the agent. As the agent moves, it completes its perception of its environment. Figure 4 shows the tactile and visual stimuli memorized in the space memory.
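
The update of such a map can be sketched as follows, assuming that each action has learned coefficients `(tx, ty, omega)` describing how stimuli move in the egocentric frame (a first-order model, reasonable only for small steps). The data layout, a list of `(x, y, modality)` tuples, and the function name are assumptions made for illustration.

```python
def update_memory(memory, motion, new_stimuli):
    """Move the stored stimuli according to the action, then add new ones.

    memory:      list of (x, y, modality) tuples in the agent's frame
    motion:      (tx, ty, omega) displacement model of the enacted action
    new_stimuli: stimuli currently detected by the sensors
    """
    tx, ty, omega = motion
    moved = [(x + tx - omega * y, y + ty + omega * x, modality)
             for x, y, modality in memory]
    return moved + list(new_stimuli)


# Hypothetical example: a visual stimulus is stored, then the agent moves
# forward by one unit, so the stimulus moves one unit backward in the
# agent's frame. It stays in memory even if no sensor detects it anymore.
memory = [(1.0, 0.0, "visual")]
forward = (0.0, -1.0, 0.0)
memory = update_memory(memory, forward, [(0.0, 1.0, "tactile")])
```

Keeping the displaced stimuli in memory is what gives the agent a form of object persistence: a target that leaves the sensors still has an estimated position.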

If the different sensor modalities are mapped together, stimuli from the same object will be stored at the same place in the stimuli map. We can thus link these stimuli to represent "objects" as they are perceived by the agent. Figure 5 shows such associations between the visual and tactile stimuli that the agent can recognize in its environment.
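
A minimal sketch of this linking step, under the assumption that "the same place" means the same cell of a regular grid laid over the stimuli map (the `cell_size` parameter, the tuple layout and the function name are hypothetical):

```python
from collections import defaultdict


def associate(stimuli, cell_size=1.0):
    """Group stimuli by grid cell and keep the multimodal cells.

    stimuli: iterable of (x, y, modality) tuples in the agent's frame.
    Returns a dict mapping each cell that holds more than one modality
    to the set of modalities found there.
    """
    cells = defaultdict(set)
    for x, y, modality in stimuli:
        key = (int(x // cell_size), int(y // cell_size))
        cells[key].add(modality)
    return {key: mods for key, mods in cells.items() if len(mods) > 1}


# Hypothetical stimuli: the first two fall into the same cell and are
# linked; the third is seen but never touched, so it stays unlinked.
stimuli = [
    (0.2, 0.3, "visual"),
    (0.7, 0.1, "tactile"),
    (3.5, 2.0, "visual"),
]
links = associate(stimuli)
```

Counting how often a given pair of stimuli shares a cell over time would be one way to turn these co-occurrences into learned associations rather than one-off coincidences.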

These algorithms were presented at the BRIMS 2012 conference (see Publications section).