This system is a real-world implementation of the bio-inspired navigation model developed during my postdoc at the LITIS lab (Rouen). We used a stereo camera to generate the environmental context (Figure 1).

The principles of this system are described in detail in our article 'A bio-inspired model for robust navigation assistive devices'.

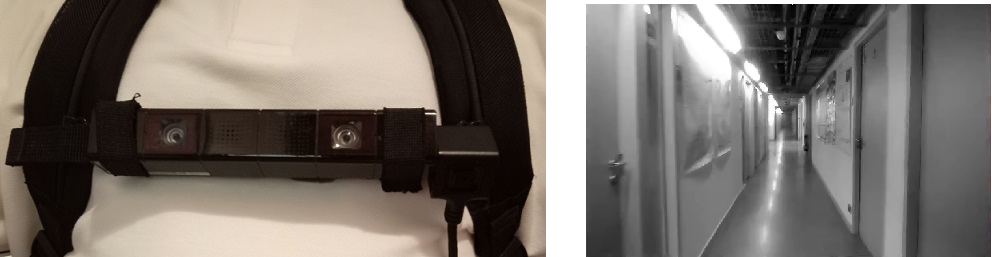

Figure 1: The camera is a modified Sony PS4 camera model 1.

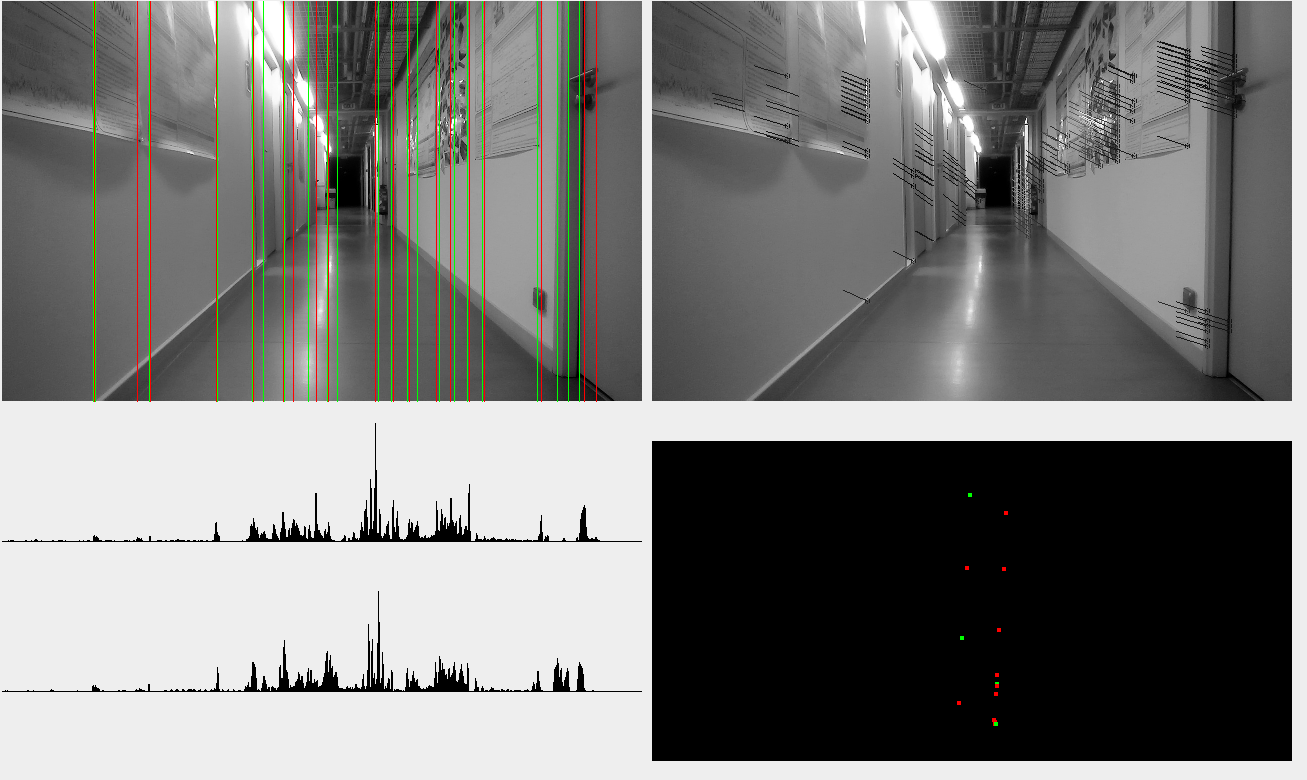

The visual system uses the disparity between the stereo images to detect and localize vertical lines in the scene. These lines are then projected onto the navigation plane to generate the environmental context (Figure 2). We differentiate lines with positive and negative gradients, which helps in recognizing the contexts.

Figure 2: The visual system detects vertical lines in the scene (top left) and uses the disparity between the stereo images (top right) to localize them in space. The lines are projected onto the navigation plane (bottom right) to define the environmental context.
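The geometry behind this projection step can be sketched for a rectified stereo pair with the pinhole model: a vertical line seen at column `u` with disparity `d` lies at depth `z = f * B / d` and lateral offset `x = (u - cx) * z / f`, where `f` is the focal length in pixels, `B` the baseline in metres and `cx` the principal point column. The sketch below is a minimal illustration under those assumptions only; the names (`VerticalLine`, `project_to_plane`, `build_context`) and any calibration values are hypothetical, not taken from the actual implementation or from the PS4 camera's specifications.

```python
from dataclasses import dataclass


@dataclass
class VerticalLine:
    """A vertical line detected in the rectified left image (hypothetical structure)."""
    u: float            # image column of the line, in pixels
    disparity: float    # left/right horizontal disparity, in pixels
    gradient_sign: int  # +1 or -1: sign of the horizontal intensity gradient


def project_to_plane(line, focal_px, baseline_m, cx):
    """Triangulate a line and return its (x, z) position on the navigation plane.

    z is the distance in front of the camera and x the lateral offset
    (positive to the right), both in metres.
    """
    if line.disparity <= 0:
        raise ValueError("disparity must be positive to triangulate")
    z = focal_px * baseline_m / line.disparity  # depth from disparity
    x = (line.u - cx) * z / focal_px            # back-project the image column
    return x, z


def build_context(lines, focal_px, baseline_m, cx):
    """Return the context as (x, z, gradient_sign) points, skipping invalid lines."""
    context = []
    for line in lines:
        if line.disparity > 0:
            x, z = project_to_plane(line, focal_px, baseline_m, cx)
            context.append((x, z, line.gradient_sign))
    return context
```

For example, with made-up calibration values `focal_px=700.0`, `baseline_m=0.08` and `cx=320.0`, a line at `u=495.0` with a disparity of 28 pixels is placed about 2 m ahead and 0.5 m to the right. Keeping the gradient sign in each point lets later matching distinguish the two kinds of lines.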

With the camera mounted on a trolley, it is possible to map a path in an unknown environment. The system was tested in two environments.

Construction of a path in the corridor environment. Grid cells and head-direction cells indicate the position and orientation around the current place cell. Place cells are mapped on the global grid (observation tool), which shows the graph (blue points) and the trajectory (cyan line).

Construction of a path in the floor loop environment.

The recorded path can then be followed using navigation instructions: each place cell gives the position of the next place cell. By aligning the direction of the trolley with the direction of the next place cell, the user can follow the path.

Following the path in the corridor environment.

Following the path in the floor loop environment.
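Following the path thus reduces to steering toward the next place cell. A minimal sketch, assuming that the current position, the heading and the next place cell's position are all expressed in one common planar frame (in the model they come from grid cells, head-direction cells and the place cell graph): compute the bearing to the next place cell, subtract the current heading, and wrap the result to [-π, π). The function names and the 10° tolerance are hypothetical choices for illustration.

```python
import math


def heading_error(position, heading, next_cell):
    """Signed angle (radians) to turn so as to face next_cell.

    position, next_cell: (x, y) in metres, in a common frame (assumed).
    heading: current orientation in radians, counter-clockwise from the x axis.
    A positive result means turning left (counter-clockwise).
    """
    dx = next_cell[0] - position[0]
    dy = next_cell[1] - position[1]
    bearing = math.atan2(dy, dx)  # direction of the next place cell
    # Wrap the difference into [-pi, pi) so the shortest turn is chosen.
    return (bearing - heading + math.pi) % (2 * math.pi) - math.pi


def instruction(error, tolerance=math.radians(10)):
    """Turn a heading error into a simple guidance instruction."""
    if abs(error) <= tolerance:
        return "straight ahead"
    return "turn left" if error > 0 else "turn right"
```

Wrapping the angle matters: without it, a heading of 170° and a bearing of -170° would produce a 340° turn instead of a 20° one.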

The use of a decentralized graph of local models makes the system robust to environmental changes: the system only considers points of interest that are close to their expected positions in a local model. Thus, as long as a sufficient number of points of interest remain unchanged, the system is able to track the position. In the following video, several pieces of furniture were added or moved relative to the previously recorded path.

Robustness of the model to environmental changes. We added or moved tables, chairs and a coat hanger, and opened several doors.
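The gating principle described above can be illustrated with a simplified sketch: each point of interest expected by the current local model is matched only to an observed point of the same gradient sign lying within a fixed radius, and the position is updated only if enough matches survive. This is a hypothetical simplification (nearest-neighbour gating and a mean shift), not the model's actual matching procedure; `max_dist` and `min_matches` are illustrative parameters.

```python
import math


def match_points(expected, observed, max_dist=0.3):
    """Pair each expected point with the nearest observed point of the same
    gradient sign lying within max_dist metres; unmatched points are ignored.

    Points are (x, z, gradient_sign) tuples.
    """
    pairs = []
    for e in expected:
        best, best_d = None, max_dist
        for o in observed:
            if o[2] != e[2]:
                continue  # lines with opposite gradients never match
            d = math.dist(e[:2], o[:2])
            if d <= best_d:
                best, best_d = o, d
        if best is not None:
            pairs.append((e, best))
    return pairs


def estimate_shift(expected, observed, max_dist=0.3, min_matches=3):
    """Mean displacement of the matched points, or None if tracking is lost."""
    pairs = match_points(expected, observed, max_dist)
    if len(pairs) < min_matches:
        return None  # too few unchanged points of interest
    dx = sum(o[0] - e[0] for e, o in pairs) / len(pairs)
    dz = sum(o[1] - e[1] for e, o in pairs) / len(pairs)
    return dx, dz
```

A moved table simply produces expected points with no observed neighbour inside the radius (and new observed points with no expected counterpart); both are ignored, so the estimate relies only on the landmarks that stayed in place.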

The local tracking system, using grid and head-direction cells, can track the movements of the camera in all directions, as long as the camera remains within the range of the grid cell module (here, the module covers a 220 × 220 cm square). This feature makes it possible to track a person in space.

Tracking movements in space: lateral movement of 60 cm to the left of the place cell's position (3 grid cells), then 40 cm to the right of the place cell's position (2 grid cells).
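These figures imply a grid cell spacing of 20 cm (60 cm corresponds to 3 grid cells and 40 cm to 2), so the 220 × 220 cm module spans 11 cells per side. The sketch below assumes the module is centred on the place cell and uses a plain Cartesian quantization rather than a grid cell network; it maps a lateral and forward offset (in cm) to grid cell indices and reports when the camera leaves the module range. The constant and function names are hypothetical, and the sign convention (negative = left) is an assumption.

```python
MODULE_SIZE_CM = 220.0   # side of the square covered by the grid cell module
CELL_SPACING_CM = 20.0   # inferred from "60 cm = 3 grid cells"
HALF_RANGE_CM = MODULE_SIZE_CM / 2
MAX_INDEX = int(MODULE_SIZE_CM // CELL_SPACING_CM) // 2  # 11 cells per side -> indices -5..5


def _index(offset_cm):
    """Nearest grid cell index along one axis, clamped to the module."""
    return max(-MAX_INDEX, min(MAX_INDEX, round(offset_cm / CELL_SPACING_CM)))


def offset_to_grid(dx_cm, dy_cm):
    """Grid cell (i, j) for an offset from the place cell, or None when the
    camera is outside the module's range and can no longer be tracked.

    dx_cm: lateral offset (negative = left, assumed); dy_cm: forward offset.
    """
    if abs(dx_cm) > HALF_RANGE_CM or abs(dy_cm) > HALF_RANGE_CM:
        return None
    return _index(dx_cm), _index(dy_cm)
```

With this convention, the movement in the video corresponds to `offset_to_grid(-60, 0) == (-3, 0)` followed by `offset_to_grid(40, 0) == (2, 0)`, while an offset of 150 cm falls outside the module and returns `None`.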

We also tested the system with the camera worn on the chest. Although the system is not very tolerant to camera tilt and roll, it could track the position along the corridor path.

Figure 3: Camera attached to the front strap of a backpack.

Tracking a person with the camera worn on chest.

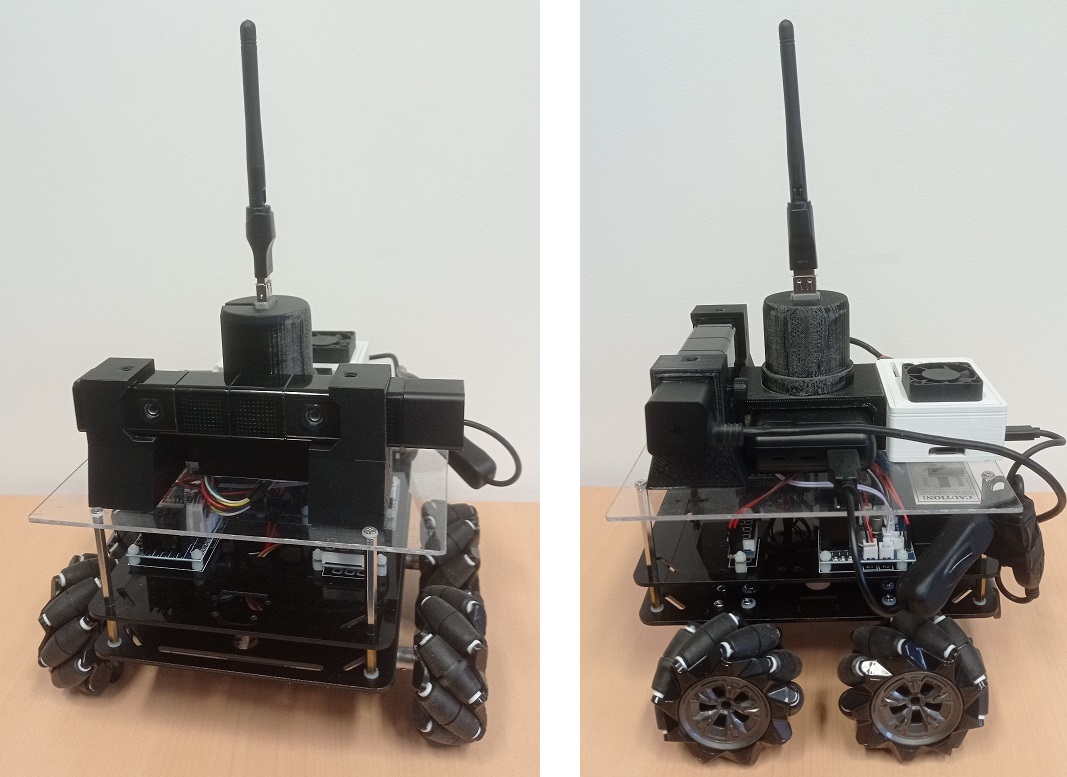

The navigation system was also tested on an autonomous mobile robot equipped with a Banana Pi M5 single-board computer. This test demonstrates the feasibility of small, portable embedded devices, as well as the guidance capabilities of the model: the robot moves toward the next place cell in the graph, which allows it to follow the recorded path autonomously.

Figure 4: The robotic platform is based on an Osoyoo Mecanum Wheel. The upper deck holds the stereo camera, a power bank, a Banana Pi M5 single-board computer and a Wi-Fi dongle. The lower deck holds an Arduino Mega board and two motor drivers controlling the four DC motors.

The robot follows the path previously recorded with the trolley in the corridor environment. Despite the height difference, the robot is still able to move through the corridor. However, it loses tracking when arriving in the middle room, where the point of view from the ground is too different to recognize the environment.
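On the robot, moving toward the next place cell also requires turning a desired motion into commands for the four DC motors. A minimal sketch, assuming the standard inverse kinematics of a mecanum platform with rollers in the usual X arrangement, a robot frame with x forward and y to the left, and counter-clockwise positive rotation, computes wheel angular speeds from a body velocity (vx, vy, ω). The geometry defaults are placeholders, not the Osoyoo platform's measured dimensions; the function names are hypothetical, and how the Arduino Mega converts these speeds into motor driver commands is not shown.

```python
import math


def mecanum_wheel_speeds(vx, vy, omega, half_length=0.08, half_width=0.10,
                         wheel_radius=0.03):
    """Wheel angular speeds (rad/s) for a body velocity command.

    vx: forward speed (m/s), vy: leftward speed (m/s), omega: yaw rate
    (rad/s, counter-clockwise). Geometry values are placeholders (metres).
    Returns (front_left, front_right, rear_left, rear_right).
    """
    k = half_length + half_width
    front_left = (vx - vy - k * omega) / wheel_radius
    front_right = (vx + vy + k * omega) / wheel_radius
    rear_left = (vx + vy - k * omega) / wheel_radius
    rear_right = (vx - vy + k * omega) / wheel_radius
    return front_left, front_right, rear_left, rear_right


def move_towards(dx, dy, speed=0.15, **geometry):
    """Translate (without rotating) toward a target at (dx, dy) metres in the
    robot frame, e.g. the next place cell."""
    distance = math.hypot(dx, dy)
    if distance == 0:
        return 0.0, 0.0, 0.0, 0.0
    return mecanum_wheel_speeds(speed * dx / distance, speed * dy / distance,
                                0.0, **geometry)
```

A mecanum base can translate in any direction without turning, so `move_towards` is one possible strategy; a controller could instead rotate first and then drive forward, as the trolley user does. Which strategy the actual robot uses is not covered here.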