ErnestIRL Simulator

Description :

Like ErnestIRL, the model has a front contact sensor, a color pattern sensor underneath, and a panoramic camera. Note that this camera is implemented as a top-view camera instead of a system using a spherical mirror, as BGE (the Blender Game Engine) cannot simulate a mirror efficiently. The resulting image is displayed in front of the observation camera using a script that can be found here: www.tutorialsforblender3d.com.

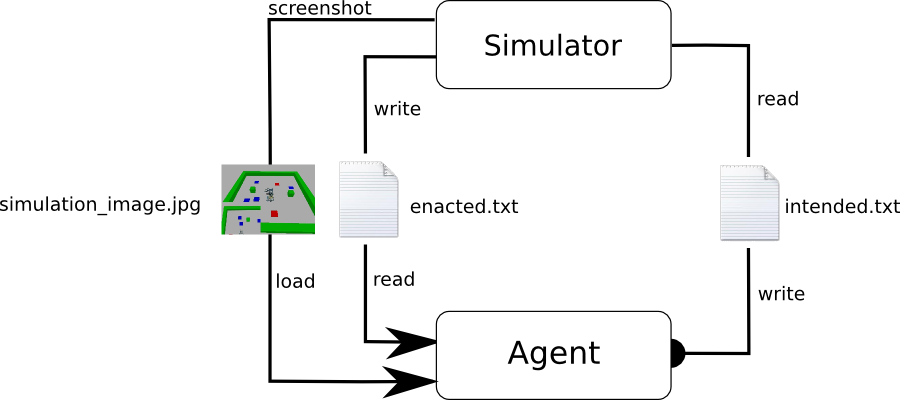

The simulator communicates with an external decisional mechanism through three files. This solution has the advantage of being simple and easy to use on different operating systems and with different programming languages. It also enforces a strict separation between the decisional system (the agent) and its environment: the agent can only act on and perceive its environment through interactions, which respects the principles of Radical Interactionism. The decision cycle starts with the agent writing the symbol of an interaction in file intention.txt; the agent then waits while file enacted.txt is empty. The simulator waits while file intention.txt is empty; when the file contains a symbol, the simulator reads it, erases the file's contents, and processes the interaction. At the end of the enaction cycle, the simulator writes the symbol of the enacted interaction in file enacted.txt. The agent can then read this symbol and, after erasing it from the file, integrate the result of its intention.

simulation_image.jpg is generated at each decision cycle. The bottom left part can be used to define visual interactions.
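The decision cycle described above can be sketched from the agent's side as follows. This is a minimal illustration, not the code shipped in the archive: the function names enact and read_and_clear, the polling interval, the timeout, and the example symbol are assumptions. Only the file names intention.txt and enacted.txt come from the description.

```python
import os
import time


def read_and_clear(path):
    """Return the stripped contents of `path`, then empty the file."""
    with open(path, "r+") as f:
        symbol = f.read().strip()
        f.seek(0)
        f.truncate()
    return symbol


def enact(symbol, directory=".", poll=0.05, timeout=10.0):
    """Agent side of one decision cycle (hypothetical helper).

    Writes the intended interaction symbol to intention.txt, waits
    while enacted.txt is empty, then reads and erases the enacted symbol.
    """
    intention_path = os.path.join(directory, "intention.txt")
    enacted_path = os.path.join(directory, "enacted.txt")

    # 1. Write the intended interaction.
    with open(intention_path, "w") as f:
        f.write(symbol)

    # 2. Poll until the simulator has written a result (assumed timeout).
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if os.path.exists(enacted_path) and os.path.getsize(enacted_path) > 0:
            # 3. Read the enacted interaction and erase it from the file.
            return read_and_clear(enacted_path)
        time.sleep(poll)
    raise TimeoutError("no enacted interaction received from the simulator")
```

For example, enact("forward", directory="Ernest_simulator") would block until the simulator reports the enacted interaction. The symbol "forward" is only a placeholder: the actual interaction symbols depend on the decisional system and are not specified here.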

Resources :

- Ernest_simulator.zip: archive containing the simulator Blender file, the exchange files, and a Python script (test_random.py) to test the simulator. Requires Blender 2.69 or above.

- version7_3_simu.zip : sources of decisional system 7.3 of Ernest, modified to be used with the simulator.

- spaceMemoryV7_3_simu_(1).txt : save file of signatures learned after 1500 decision cycles, for decisional system v7.3 simu.

Utilization

To install the simulator, unzip the archive into a directory, then open the Ernest_simulation.blend file with Blender. The simulation is started by pressing 'P' and stopped by pressing 'Esc'. You can test the simulator with the script test_random.py by running the command python test_random.py from the Ernest_simulator directory. If the robot starts to move, the simulator is ready.

Commands :

It is possible to move elements of the environment, the camera and the robot during the simulation. This is the list of commands:

Manual control of the robot:

z : move forward one step

s : move backward one step

q : turn left

d : turn right

Camera controls (keypad):

translations

5 : front

2 : rear

1 : left

3 : right

/ : up

8 : down

rotations

4 : left

6 : right

* : up

9 : down

zoom : + and -

other

p : camera tracks the robot

Environment edition:

c : select the object to manipulate. The name of the selected object is displayed in the terminal.

directional arrows : move selected object.

h : hide/display selected object.

Utilization with decisional mechanisms of Ernest

It is then possible to reproduce experiments conducted with ErnestIRL, with similar results. We can however note that signatures of interactions obtained with the simulator slightly differ from signatures obtained with ErnestIRL, mainly because of differences in their visual systems.

Learning: at the beginning, the agent is mainly driven by its learning mechanism, which leads it to test its interactions and learn their signatures. As signatures become reliable, the exploitation mechanism is used more often. After nearly 7 minutes, the agent begins to move toward food while avoiding walls. The learning mechanism is still used in less familiar contexts. After nearly 17 minutes, the behavior becomes very efficient.

Here, we test obstacle avoidance (the learning mechanism is deactivated so that it does not interfere with emergent behaviors). When the agent is moving toward a prey, we put a wall block in front of it. We then observe the agent going around the wall to reach the prey.