Ernest IRL

Description:

Resources:

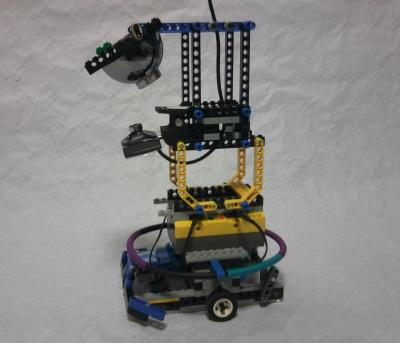

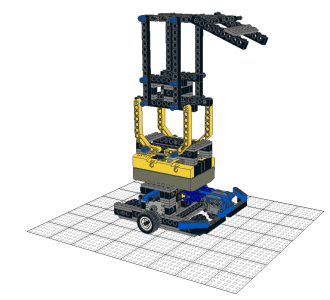

- Lego model of the robot (open with LeoCAD): robot.lcd

Note that non-Lego pieces (webcam, mirror and rubber bands) and flexible pieces are not represented.

- Lego model made with Blender: ernestIRL.blend

The components of the robot can be manipulated using the "empty" element named "Robot". The model includes a camera parented to the webcam model, which simulates the image produced by the robot's camera. To view through this camera, select it with a right click, then press Ctrl+0 (on the numeric keypad).

- Java-RCX interface: Interface.java

- NQC program: ernest.nqc

Under Windows, the NQC program can be sent to the RCX using Bricx Command Center (BricxCC).

Under Linux, install the "nqc" package, then open a console and type the command:

nqc -S"serial_port" -d "file_name.nqc"

where "serial_port" is the serial port in use. In my case, I use a USB-to-serial converter (/dev/ttyUSB0).

Thus, the command is:

nqc -S/dev/ttyUSB0 -d ernest.nqc
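To script the download from Java, the Linux command above can be assembled as an argument list and handed to `ProcessBuilder`. This is a minimal sketch, assuming Java 9+ and `nqc` available on the `PATH`; the class `NqcDownload` and its methods are hypothetical and are not part of the project's Interface.java.

```java
import java.io.IOException;
import java.util.List;

// Hypothetical helper (not part of Interface.java): wraps "nqc -S<port> -d <file>".
final class NqcDownload {
    private NqcDownload() {}

    // Builds the argument list; the port is glued to -S with no space, as in the shell command.
    static List<String> command(String serialPort, String nqcFile) {
        return List.of("nqc", "-S" + serialPort, "-d", nqcFile);
    }

    // Runs nqc, forwarding its output to this console, and returns nqc's exit status.
    static int download(String serialPort, String nqcFile) throws IOException, InterruptedException {
        Process p = new ProcessBuilder(command(serialPort, nqcFile)).inheritIO().start();
        return p.waitFor();
    }

    public static void main(String[] args) {
        // Prints the command that download("/dev/ttyUSB0", "ernest.nqc") would run.
        System.out.println(String.join(" ", command("/dev/ttyUSB0", "ernest.nqc")));
    }
}
```

Passing the arguments as a list rather than a single string avoids any shell quoting issues with the port or file name.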

Commands:

List of action commands (message numbers were chosen arbitrarily):

- move forward one step: 42. The robot returns 2 if it bumps into an obstacle, 3 if it stops on a blue square, and 1 otherwise.

- turn left by 45°: 64.

- turn right by 45°: 128.

- turn left by 90°: 164.

- turn right by 90°: 200.

In the case of a rotation, the robot returns 1.
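The command table and the documented replies can be captured in a small Java type, which makes unknown message numbers or unexpected replies easy to detect. This is a sketch under stated assumptions: the names `RcxProtocol`, `Command`, `Outcome` and `decode` are hypothetical, only the message numbers and reply codes come from the list above, and how the reply is actually received from the robot (for example through Interface.java) is not shown.

```java
// Hypothetical Java mirror of the command table; names are illustrative, not from Interface.java.
final class RcxProtocol {
    private RcxProtocol() {}

    enum Command {
        FORWARD(42), TURN_LEFT_45(64), TURN_RIGHT_45(128), TURN_LEFT_90(164), TURN_RIGHT_90(200);

        final int message;

        Command(int message) { this.message = message; }

        // Looks up a command by its message number.
        static Command fromMessage(int message) {
            for (Command c : values()) {
                if (c.message == message) return c;
            }
            throw new IllegalArgumentException("Unknown command: " + message);
        }
    }

    enum Outcome { STEPPED, BUMPED, BLUE_SQUARE, TURNED }

    // Interprets the value the robot returns after executing a command.
    static Outcome decode(Command cmd, int reply) {
        if (cmd == Command.FORWARD) {
            switch (reply) {
                case 1: return Outcome.STEPPED;
                case 2: return Outcome.BUMPED;
                case 3: return Outcome.BLUE_SQUARE;
                default: throw new IllegalArgumentException("Unexpected reply to FORWARD: " + reply);
            }
        }
        // Every rotation is documented as returning 1.
        if (reply == 1) return Outcome.TURNED;
        throw new IllegalArgumentException("Unexpected reply to " + cmd + ": " + reply);
    }

    public static void main(String[] args) {
        System.out.println(decode(Command.fromMessage(42), 2));
        System.out.println(decode(Command.TURN_LEFT_90, 1));
    }
}
```

Throwing on an unexpected reply, rather than falling back to a default outcome, makes transmission errors visible instead of silently passing them on to the agent.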

These commands can be sent with BricxCC, with the provided Java interface or, under Linux, with the command:

nqc -S"serial_port" -msg "command"

For example: nqc -S/dev/ttyUSB0 -msg 42
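When sending commands through `nqc -msg` from a program, it can help to reject numbers that the NQC program does not handle before invoking the tool. This is a minimal sketch, assuming Java 9+ and `nqc` available on the `PATH`; `NqcMessage`, `command` and `send` are hypothetical names, and the sketch only sends the message without attempting to read the robot's reply.

```java
import java.io.IOException;
import java.util.List;
import java.util.Set;

// Hypothetical helper (not part of Interface.java): wraps "nqc -S<port> -msg <command>".
final class NqcMessage {
    // Message numbers taken from the list of action commands.
    static final Set<Integer> COMMANDS = Set.of(42, 64, 128, 164, 200);

    private NqcMessage() {}

    // Builds the argument list, refusing numbers that are not Ernest commands.
    static List<String> command(String serialPort, int message) {
        if (!COMMANDS.contains(message)) {
            throw new IllegalArgumentException("Not an Ernest command: " + message);
        }
        return List.of("nqc", "-S" + serialPort, "-msg", Integer.toString(message));
    }

    // Runs nqc and returns its exit status; the robot's reply is not read here.
    static int send(String serialPort, int message) throws IOException, InterruptedException {
        Process p = new ProcessBuilder(command(serialPort, message)).inheritIO().start();
        return p.waitFor();
    }

    public static void main(String[] args) {
        // Prints the command that send("/dev/ttyUSB0", 42) would run.
        System.out.println(String.join(" ", command("/dev/ttyUSB0", 42)));
    }
}
```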

Experiments:

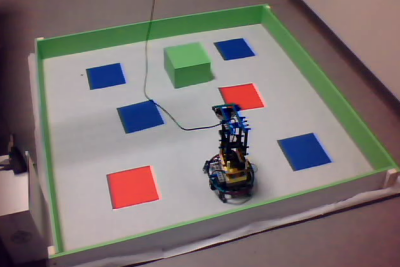

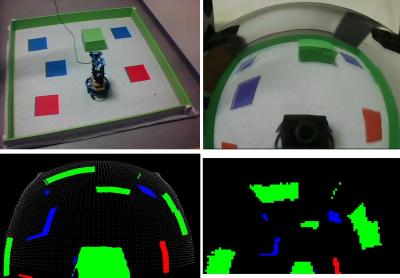

The robot demonstrates the robustness of our system: even with an unreliable spatial memory and imprecise motors, its behavior is very similar to that of the simulated agent. The robot generates pertinent signatures of interactions and moves toward prey while avoiding walls.

We observe, in the signatures of interactions, that the visible part of the robot (the reflection of the camera) is not taken into account. Indeed, this element is always present in the visual field and is thus not pertinent for predicting the result of an interaction: the robot "forgets" its own camera. Another interesting observation is that when the battery runs down and the robot's step becomes shorter, the signatures adapt to this variation. The model thus demonstrates tolerance of, and adaptability to, failures.