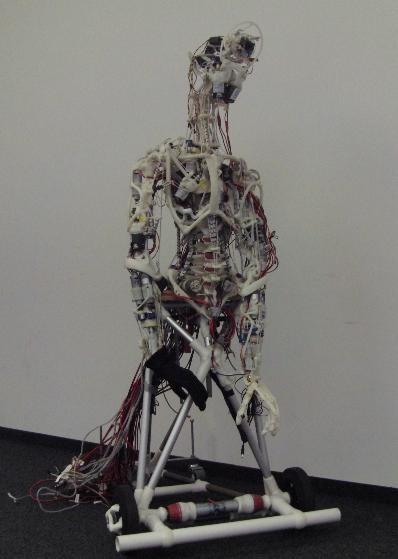

I worked on the ECCERobot robot during my final-year research internship at the AI Lab of the University of Zurich in 2010.

My internship thesis (in French)

EcceRobot

EcceRobot on the University of Zurich's website.

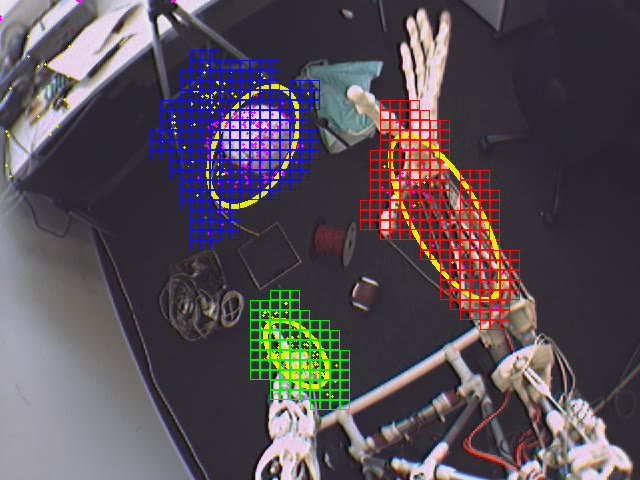

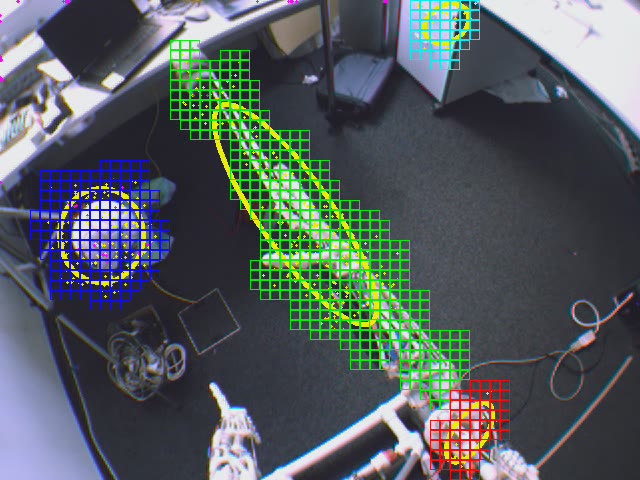

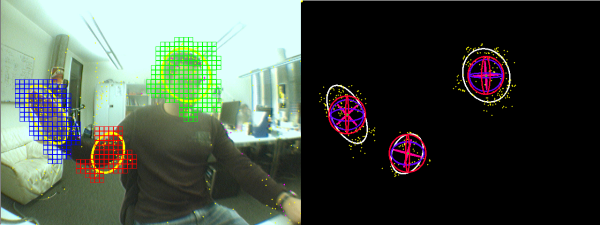

The segmentation and tracking system

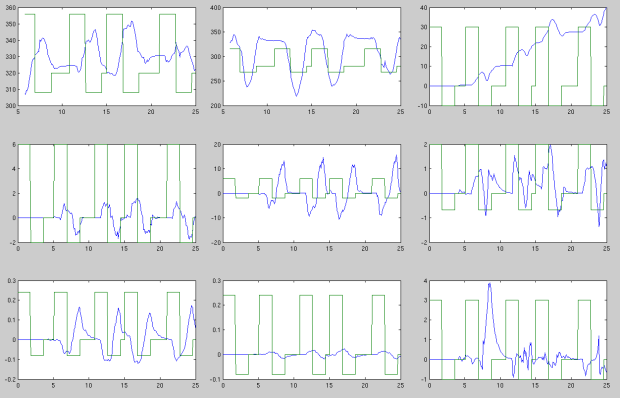

During my internship, I developed a segmentation and tracking system that estimates the translations and rotations of an object in space. The system is built on a two-level multi-agent architecture. A first, large set of agents tracks points of the image using an optical flow algorithm and filters the detected movements to remove noise. A second group of agents extracts and manages the data produced by the first group, and controls how the first-level agents are distributed over the image. The system assumes that a group of image points moving in a synchronized way belongs to the same object. The first-level agents that detect an object are assigned to the analysis of that object's movements, under the supervision of a second-level agent. This second-level agent analyses the behavior of its agents to estimate the object's movements (translation and rotation) in the three dimensions of space (cf. the internship thesis for more details).
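To give a rough idea of what a second-level agent has to compute, here is a minimal sketch, not the code from the internship. It assumes a strong simplification: the points tracked by the first-level agents are plain 2D image coordinates, and only a translation and an in-plane rotation are estimated (the actual system works in three dimensions). The function name `estimate_rigid_motion` and the sample coordinates are made up for the illustration.

```python
import math

def estimate_rigid_motion(before, after):
    """Estimate the 2D translation and in-plane rotation between two
    matched point sets (hypothetical helper, 2D simplification)."""
    n = len(before)
    # Centroids of the tracked points before and after the movement.
    cx0 = sum(x for x, _ in before) / n
    cy0 = sum(y for _, y in before) / n
    cx1 = sum(x for x, _ in after) / n
    cy1 = sum(y for _, y in after) / n
    # Accumulate dot and cross products of the centered points:
    # their ratio gives the least-squares rotation angle.
    dot = 0.0
    cross = 0.0
    for (x0, y0), (x1, y1) in zip(before, after):
        ax, ay = x0 - cx0, y0 - cy0
        bx, by = x1 - cx1, y1 - cy1
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    angle = math.atan2(cross, dot)  # radians, counter-clockwise
    translation = (cx1 - cx0, cy1 - cy0)  # displacement of the centroid
    return translation, angle

# Usage: four points of an object, rotated and shifted between two frames.
before = [(1.0, 1.0), (-1.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]
c, s = math.cos(0.2), math.sin(0.2)
after = [(c * x - s * y + 3.0, s * x + c * y + 0.5) for x, y in before]
translation, angle = estimate_rigid_motion(before, after)
print(translation, angle)
```

Averaging over all the points of a group is what makes the estimate tolerant to noise on individual trackers, which is the same reason the system uses many first-level agents per object.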

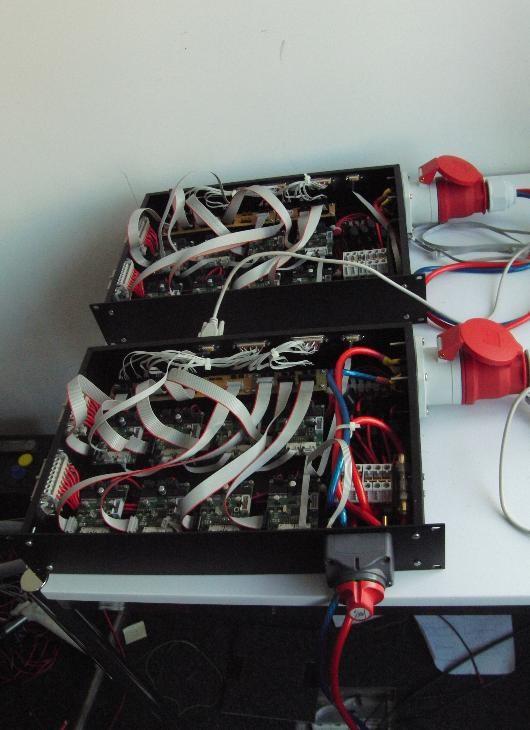

Experiments on the robot ECCERobot

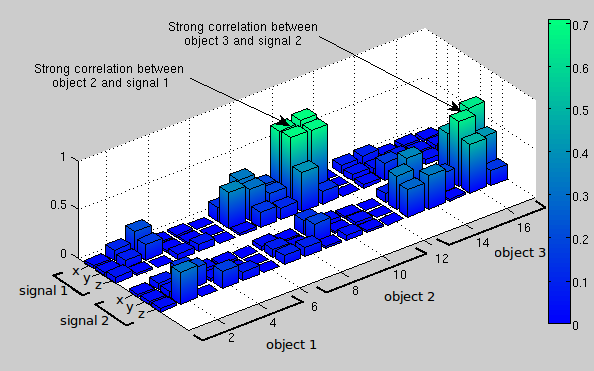

The aim of my internship was to detect which elements were part of the robot's body. To achieve this, we compare the movements detected by the tracking system with the motor commands. A correlation between an object's movements and a motor command indicates that the object is part of the robot's body. It is even possible to determine the movements associated with each motor command.
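The comparison described above can be sketched as follows. This is only an illustration under stated assumptions, not the method used in the thesis: the motor command and the motion of a tracked object are taken to be two time series sampled at the same instants, the Pearson coefficient is used as the correlation measure, and the `0.8` threshold, the function names and the sample values are all hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        # A constant series carries no information: treat as uncorrelated.
        return 0.0
    return cov / math.sqrt(vx * vy)

def is_body_part(motor_command, object_motion, threshold=0.8):
    """Flag an object as part of the body when its motion follows
    the motor command closely enough (threshold is an assumption)."""
    return abs(pearson(motor_command, object_motion)) >= threshold

# Usage: one motor command, and the motion amplitude of two tracked objects.
motor = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
arm = [0.1, 0.9, 0.0, 1.1, 0.1, 1.0]         # moves with the command
background = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]  # does not react at all
print(is_body_part(motor, arm), is_body_part(motor, background))
```

Running the same test for every motor command gives, for each object, the list of commands that move it, which is one simple way to associate movements with commands.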